Over the centuries, algorithms have helped mathematicians and scientists carry out numerous fundamental operations. From the simple procedures used by early Egyptian, Greek, and Persian mathematicians to today’s more robust AI-enabled algorithms, their evolution reflects remarkable technological progress. While Artificial Intelligence (AI) and Machine Learning (ML) are extending their reach and contributions in various military and civilian domains, it is interesting to witness the technology being applied to itself, i.e., using ML to improve the effectiveness of its underlying algorithms.

Despite growing familiarity with algorithms over time, finding new ones that are both reliable and accurate remains difficult. A recent study by DeepMind, a London-based AI subsidiary of Alphabet, published in Nature under the title ‘Discovering faster matrix multiplication algorithms with reinforcement learning’, has produced some notable findings in this regard. It revealed new shortcuts, discovered by AI, for faster matrix multiplication.

DeepMind developed an AI system called ‘AlphaTensor’ to expedite matrix multiplication. Matrix multiplication, in which two grids of numbers are multiplied together, is a simple algebraic operation often taught in high school. However, because it is ubiquitous in computing and the digital world, its efficiency has considerable influence.
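To make the idea of "steps" concrete, here is a minimal Python sketch of the schoolbook method that also counts scalar multiplications. The function name `matmul_naive` and the list-of-rows representation are illustrative assumptions, not something taken from the DeepMind study.

```python
def matmul_naive(a, b):
    """Multiply two matrices (lists of rows) the schoolbook way.

    Returns the product and the number of scalar multiplications used.
    """
    n, m, p = len(a), len(b), len(b[0])
    assert all(len(row) == m for row in a), "inner dimensions must match"
    result = [[0] * p for _ in range(n)]
    multiplications = 0
    for i in range(n):          # row of a
        for j in range(p):      # column of b
            for k in range(m):  # position along the shared dimension
                result[i][j] += a[i][k] * b[k][j]
                multiplications += 1
    return result, multiplications


product, count = matmul_naive([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(product)  # [[19, 22], [43, 50]]
print(count)    # 8
```

For two n x n matrices this method always uses n x n x n scalar multiplications, so 8 for the 2 x 2 case and 64 for the 4 x 4 case. That count is the baseline faster algorithms try to beat.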

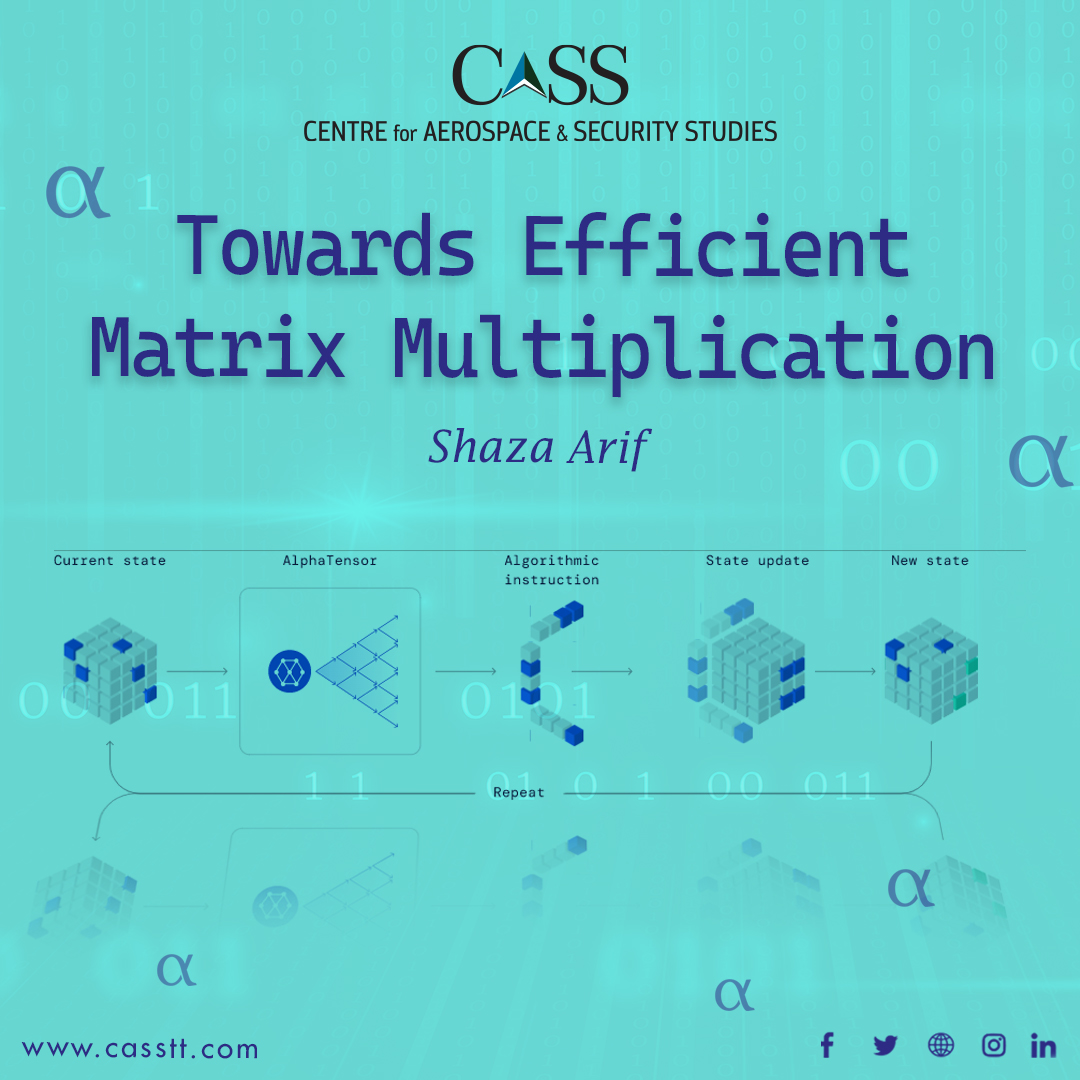

‘AlphaTensor’ was tasked with creating novel, correct, and efficient algorithms that carry out matrix multiplication in the fewest possible steps. The algorithm discovery process was treated as a single-player game. AlphaTensor builds on AlphaZero, the AI agent that gained global attention for its extraordinary performance in board games like Chess and Go.

AlphaTensor represented the board as a 3-D array of numbers and, within a limited number of moves, tried to find correct multiplication algorithms. It uses reinforcement learning, in which neural networks interact with an environment in pursuit of a specific goal; when the results are favourable, the internal parameters are updated accordingly. It also uses tree search, in which the system explores the outcomes of branching possibilities to identify the most promising action at each step. The outcomes are then used to improve the neural networks, which in turn guide the tree search better and yield more successes to learn from.
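The game itself can be illustrated with a small Python sketch. It assumes one common way of encoding 2 x 2 matrix multiplication as a 3-D array, and treats each move as subtracting the product of three small vectors from the board; the goal is to reach an all-zero board in as few moves as possible. The helper names (`matmul_tensor`, `apply_move`, `is_solved`) and the index layout are hypothetical choices for this illustration, and the learning and search components are left out entirely. The seven moves listed are Strassen’s classic scheme from 1969, not an AlphaTensor discovery.

```python
def matmul_tensor(n):
    """3-D array with a 1 wherever entry a[i][k] times b[k][j] feeds c[i][j]."""
    size = n * n
    t = [[[0] * size for _ in range(size)] for _ in range(size)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                t[i * n + k][k * n + j][i * n + j] = 1
    return t


def apply_move(state, u, v, w):
    """One move: subtract the product u[a] * v[b] * w[c] from every cell."""
    return [[[state[a][b][c] - u[a] * v[b] * w[c]
              for c in range(len(w))]
             for b in range(len(v))]
            for a in range(len(u))]


def is_solved(state):
    """The game is won when the board is all zeros."""
    return all(x == 0 for plane in state for row in plane for x in row)


# Strassen's seven moves; entries are ordered (1,1), (1,2), (2,1), (2,2).
STRASSEN_MOVES = [
    ([1, 0, 0, 1], [1, 0, 0, 1], [1, 0, 0, 1]),
    ([0, 0, 1, 1], [1, 0, 0, 0], [0, 0, 1, -1]),
    ([1, 0, 0, 0], [0, 1, 0, -1], [0, 1, 0, 1]),
    ([0, 0, 0, 1], [-1, 0, 1, 0], [1, 0, 1, 0]),
    ([1, 1, 0, 0], [0, 0, 0, 1], [-1, 1, 0, 0]),
    ([-1, 0, 1, 0], [1, 1, 0, 0], [0, 0, 0, 1]),
    ([0, 1, 0, -1], [0, 0, 1, 1], [1, 0, 0, 0]),
]

board = matmul_tensor(2)
for u, v, w in STRASSEN_MOVES:
    board = apply_move(board, u, v, w)

print(len(STRASSEN_MOVES), is_solved(board))  # 7 True
```

Each move corresponds to one multiplication in the resulting algorithm, so clearing the board in seven moves rather than the schoolbook eight is what a "faster algorithm" means in this setting.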

As per the paper’s findings, AlphaTensor discovered thousands of algorithms for multiplying matrices of various sizes, some of which broke decades-old efficiency records held by existing algorithms. In particular, they improved on Strassen’s well-known two-level algorithm. For example, AlphaTensor found an algorithm that multiplies two 4 x 4 matrices in 47 multiplication steps (in modulo-2 arithmetic), outperforming the Strassen algorithm, which uses 49 steps for the same operation. Similarly, for one pair of matrix sizes that previously required 80 multiplication steps, AlphaTensor reduced the count to 76. This development has caused quite a stir in the tech world, as it is being claimed that a fifty-year-old record in Computer Science has been broken.
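The figure of 49 comes from applying Strassen’s seven-multiplication trick twice: once to the four 2 x 2 blocks of a 4 x 4 matrix, and once more inside each block product, giving 7 x 7 = 49. The following Python sketch of the recursive Strassen algorithm counts multiplications to confirm this. It is an illustration for square matrices whose size is a power of two, with hypothetical helper names; it does not reproduce AlphaTensor’s 47-step algorithm.

```python
def add(x, y):
    return [[p + q for p, q in zip(r, s)] for r, s in zip(x, y)]


def sub(x, y):
    return [[p - q for p, q in zip(r, s)] for r, s in zip(x, y)]


def split(m):
    """Cut a square matrix into four equal quadrants."""
    h = len(m) // 2
    return ([row[:h] for row in m[:h]], [row[h:] for row in m[:h]],
            [row[:h] for row in m[h:]], [row[h:] for row in m[h:]])


def strassen(a, b):
    """Return (product, scalar multiplication count).

    Assumes square matrices whose size is a power of two.
    """
    if len(a) == 1:
        return [[a[0][0] * b[0][0]]], 1
    a11, a12, a21, a22 = split(a)
    b11, b12, b21, b22 = split(b)
    # The seven block products that replace the schoolbook eight.
    pairs = [
        (add(a11, a22), add(b11, b22)),
        (add(a21, a22), b11),
        (a11, sub(b12, b22)),
        (a22, sub(b21, b11)),
        (add(a11, a12), b22),
        (sub(a21, a11), add(b11, b12)),
        (sub(a12, a22), add(b21, b22)),
    ]
    results = [strassen(x, y) for x, y in pairs]
    m1, m2, m3, m4, m5, m6, m7 = [r[0] for r in results]
    count = sum(r[1] for r in results)
    c11 = add(sub(add(m1, m4), m5), m7)
    c12 = add(m3, m5)
    c21 = add(m2, m4)
    c22 = add(add(sub(m1, m2), m3), m6)
    top = [r + s for r, s in zip(c11, c12)]
    bottom = [r + s for r, s in zip(c21, c22)]
    return top + bottom, count


a = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
identity = [[1 if i == j else 0 for j in range(4)] for i in range(4)]
product, count = strassen(a, identity)
print(product == a, count)  # True 49
```

The schoolbook method needs 64 multiplications for the same 4 x 4 product, so Strassen’s approach already saves 15, and the 47-step result described above trims two more.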

These results carry some important implications. Given that matrix multiplication is a core component of the digital world, companies around the world have invested considerable time and resources in computer hardware for it. Since it is used across a wide range of domains, including computing, image processing, graphics generation, simulations, digital communication, and neural networks, even minor improvements in its efficiency could have a notable and widespread impact in the concerned fields.

The findings demonstrate the potential of ML to solve even more complicated mathematical problems. The automatic discovery of algorithms via ML offers a way to surpass the best existing human-designed algorithms. According to the study, the resulting techniques have the potential to increase computing speed by up to 20 percent on specific hardware, making computations feasible on shorter timelines. It is pertinent to mention that fewer operations mean not only less time but also less energy spent.

The findings present a model for gamifying mathematical problems so that ML can solve them. They showed that AlphaZero is a potent algorithm that can be applied beyond winning traditional games, to solving complex mathematical tasks.

This DeepMind discovery can pave the way for future research into matrix multiplication algorithms. It can also inspire the use of AI for algorithm discovery in other computing tasks, setting the stage for possible breakthroughs in the field.

The increased efficiency of matrix multiplication has once again brought to light the ever-expanding potential of AI. To be fair, such developments do not imply that human programmers will be out of a job soon; rather, at least for now, they should be seen as adding an optimisation tool to the coder’s arsenal, one that could lead to more innovative discoveries in the future with remarkable implications for the world.

The writer is a Research Assistant at the Centre for Aerospace & Security Studies (CASS), Islamabad, Pakistan. The article was first published in Modern Diplomacy. She can be reached at: cass.thinkers@casstt.com